Red teaming is a proactive security practice where a team of experts, often called ethical hackers, simulate real-world cyberattacks to test how well an organisation can defend itself. The goal is to identify weaknesses across systems, processes, and employee awareness before a real attacker can exploit them.

Rooted in military war-gaming and later adopted by intelligence agencies such as the Central Intelligence Agency (CIA) to challenge assumptions and test strategies, red teaming has become essential in modern cybersecurity. It helps businesses across industries, such as law firms, financial services, insurance companies, and real estate agencies, strengthen their defences by exposing vulnerabilities and improving security measures.

Real-World Applications of Red Teaming

The rise in cyber threats has made proactive security strategies essential for businesses across Australia. According to the Australian Cyber Security Centre (ACSC), cybercrime reports increased by 13%, with over 76,000 incidents recorded in the latest annual report. Small businesses alone faced an average financial loss of nearly $50,000 per cyber incident.

Similarly, the Office of the Australian Information Commissioner (OAIC) reported that data breach notifications in the first half of 2024 were at their highest in over three years. These statistics underscore the importance of proactive threat simulations, such as red teaming, which aim to identify vulnerabilities before real attackers can exploit them.

Real-world applications demonstrate how organisations across industries can strengthen their security posture by simulating adversarial tactics.

Examples of Successful Red Teaming Engagements

1. Financial Institution Uncovers Security Weaknesses

A major Australian financial institution conducted a red teaming exercise to assess how effectively it could defend against tactics used by advanced threat actors. The simulated attack revealed significant vulnerabilities, including pathways to unauthorised access to sensitive financial data and potential routes for illicit financial transactions.

The proactive engagement resulted in the organisation implementing stronger access controls, enhancing multi-factor authentication, and improving threat detection protocols across critical systems.

2. Organisation Identifies Systemic Vulnerabilities

A red teaming assessment for a national organisation with complex digital infrastructure uncovered critical weaknesses across interconnected systems. The simulated attack tested both internal security measures and third-party integrations, revealing exploitable gaps in supply chain security and data access policies.

Following the assessment, the organisation adopted a holistic managed cybersecurity framework, incorporating regular penetration tests, employee security awareness programs, and stricter vendor management protocols to mitigate risks associated with evolving cyber threats.

3. Financial Services Firm Strengthens Security Controls

A mid-sized financial services company commissioned a red teaming engagement designed to simulate a full-scale cyberattack. The test exposed issues in its endpoint detection and response (EDR) systems and revealed gaps in its incident response process, particularly around data exfiltration detection.

Based on the red team’s findings, the organisation revamped its security incident response playbook, implemented advanced EDR tools, and enhanced SIEM (Security Information and Event Management) integration for faster threat visibility and response.

Red Teaming in AI

Red teaming in AI involves proactively stress-testing machine learning models and data pipelines to identify vulnerabilities, biases, and potential model manipulation before they can be exploited or cause harm.

Unique Challenges and Approaches for AI Systems

AI security presents unique challenges not commonly seen in traditional IT infrastructure:

- Continuous Learning Loops: Models that are retrained or fine-tuned on new data change over time, requiring continuous testing rather than one-off, static assessments.

- Adversarial Attacks: Attackers may use adversarial inputs, such as subtly altered data, to deceive AI models into making incorrect predictions.

- Black-Box Complexity: Many AI models operate as opaque systems, making it difficult to understand how they arrive at their outputs.

Approaches to AI Red Teaming:

- Adversarial Testing: Injecting manipulated inputs to test how the model handles deceptive data.

- Bias and Fairness Audits: Identifying discriminatory patterns within datasets and ensuring diverse data representation.

- Data Integrity Testing: Assessing how corrupted data affects model performance and decision accuracy.
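To illustrate adversarial testing, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one well-known technique for crafting adversarial inputs. It runs against a toy logistic-regression model written in plain Python. The weights, bias, input and `epsilon` are hypothetical values chosen for illustration. Testing a real model would mean computing gradients through that model instead.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights: list[float], bias: float, x: list[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Fast Gradient Sign Method (FGSM) for a logistic-regression model.

    Each feature moves by at most `epsilon` in the direction that increases
    the binary cross-entropy loss for the true label `y_true` (0 or 1).
    """
    p = predict(weights, bias, x)
    # For this model, d(loss)/d(x_i) = (p - y_true) * w_i
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

if __name__ == "__main__":
    # Hypothetical toy model and input, for illustration only.
    weights, bias = [2.0, -1.5, 0.5], -0.2
    x = [0.6, 0.2, 0.4]
    x_adv = fgsm_perturb(weights, bias, x, y_true=1, epsilon=0.3)
    print(f"clean:       {predict(weights, bias, x):.3f}")      # ~0.711 (class 1)
    print(f"adversarial: {predict(weights, bias, x_adv):.3f}")  # ~0.426 (class 0)
```

A small, bounded change to each feature flips the toy model's decision. Adversarial testing probes for exactly this kind of weakness.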

Ethical Considerations in AI Red Teaming

While stress-testing AI systems is essential for security and fairness, ethical considerations must guide these practices:

- Transparency: Organisations should clearly document methodologies, findings, and testing parameters.

- Data Privacy: Ensuring personal or sensitive data used during testing is anonymised and handled ethically.

- Accountability: Assigning responsibility for addressing identified security gaps and biases.

- Fairness: Avoiding the reinforcement of discriminatory patterns during testing.

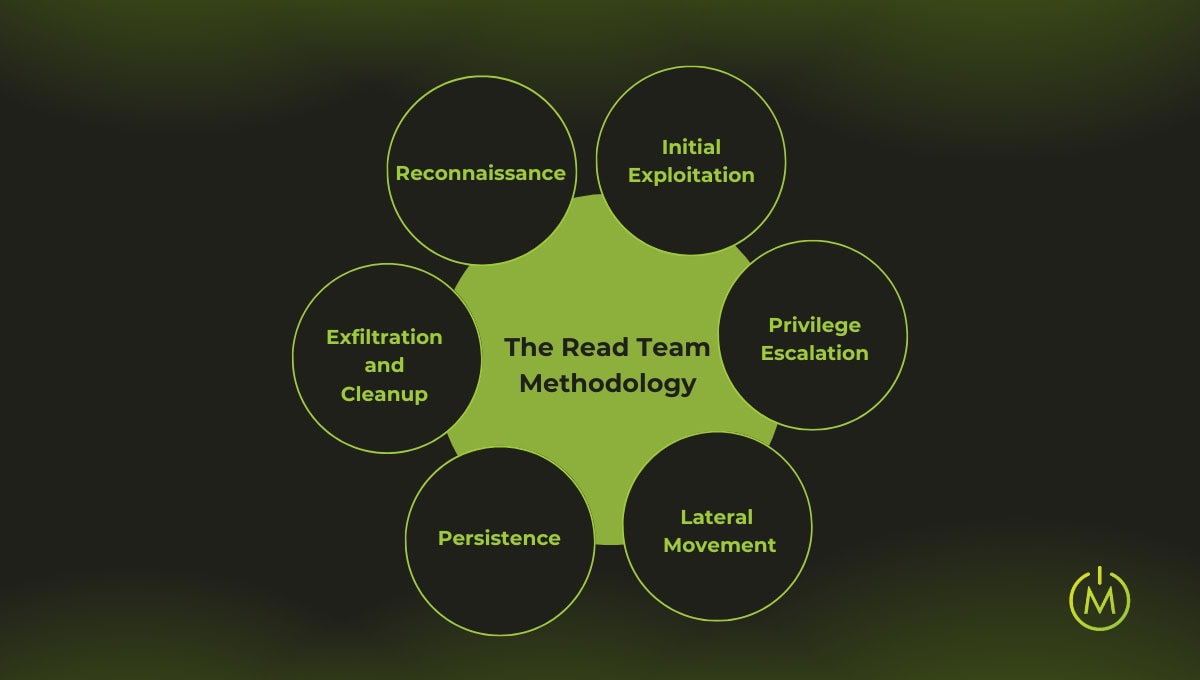

The Red Teaming Process: Phases Explained

Red teaming is a structured, multi-phase process designed to simulate a real-world cyberattack, helping organisations identify vulnerabilities and strengthen their defences. Each phase plays an important role in uncovering security gaps while minimising disruption to operations.

Phase 1: Reconnaissance

The red team begins by gathering intelligence about the target organisation, often using open-source intelligence (OSINT) tools and passive scanning techniques. The goal is to collect information such as:

- Network architecture and public-facing assets

- Employee roles and contact details

- Software versions and technologies in use
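As an example of recording software versions during reconnaissance, the sketch below extracts product/version pairs from HTTP response headers such as `Server` and `X-Powered-By`. The headers are hard-coded sample values. In an engagement they would come from authorised requests to in-scope public-facing assets. The regular expression is an assumption and only covers the common `Product/1.2.3` pattern.

```python
import re

# Matches product/version tokens such as "Apache/2.4.41" or "PHP/7.4.3".
PRODUCT_VERSION = re.compile(r"([A-Za-z][\w.-]*)/(\d+(?:\.\d+)*)")

# Response headers that commonly disclose server software and frameworks.
FINGERPRINT_HEADERS = {"server", "x-powered-by"}

def fingerprint(headers: dict[str, str]) -> dict[str, str]:
    """Return {product: version} pairs disclosed in HTTP response headers."""
    found: dict[str, str] = {}
    for name, value in headers.items():
        if name.lower() in FINGERPRINT_HEADERS:
            for product, version in PRODUCT_VERSION.findall(value):
                found[product] = version
    return found

if __name__ == "__main__":
    # Hypothetical headers, as they might be recorded from an in-scope web server.
    sample = {
        "Server": "Apache/2.4.41 (Ubuntu)",
        "X-Powered-By": "PHP/7.4.3",
        "Content-Type": "text/html",
    }
    print(fingerprint(sample))  # {'Apache': '2.4.41', 'PHP': '7.4.3'}
```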

Phase 2: Initial Exploitation

Once vulnerabilities are identified, the red team attempts to exploit them to gain initial access. This phase may involve:

- Phishing campaigns to trick employees into revealing credentials

- Exploiting unpatched software vulnerabilities

- Brute force attacks on weak passwords

Phase 3: Privilege Escalation

After gaining initial access, the next objective is to elevate privileges within the compromised system. The red team attempts to:

- Exploit misconfigured permissions

- Leverage outdated software for privilege escalation

- Crack administrative credentials
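One common check for misconfigured permissions is shown below. It assumes a Linux or other Unix-like host and walks a directory tree for regular files that any user can modify. Such files are a classic escalation path when a privileged process, such as a root cron job, executes or reads them. This is a minimal sketch covering only this single misconfiguration.

```python
import os
import stat

def find_world_writable(root: str) -> list[str]:
    """Walk a directory tree and list regular files any user can modify.

    World-writable files that privileged processes execute or read can let
    a low-privileged user inject commands that run with higher privileges.
    """
    findings = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat: inspect the entry itself rather than a symlink target
                mode = os.lstat(path).st_mode
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if stat.S_ISREG(mode) and mode & stat.S_IWOTH:
                findings.append(path)
    return sorted(findings)
```

For example, `find_world_writable("/opt/scripts")` (a hypothetical path) would list any world-writable scripts in that directory. Each finding still needs manual review to confirm that a privileged process actually uses the file.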

Phase 4: Lateral Movement

With elevated privileges, the red team attempts to move deeper into the network by accessing other systems and sensitive data repositories. Techniques include:

- Exploiting shared drives and misconfigured Remote Desktop Protocol (RDP) services

- Using compromised credentials to access additional servers

- Pivoting through the network to reach high-value targets
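Pivoting can be reasoned about as a graph problem. Hosts are nodes, and an edge means "access from this host lets us reach that one", for example through reused credentials or an exposed RDP service. The minimal sketch below uses breadth-first search to find the shortest chain of hops to a high-value target. The hostnames and edges are hypothetical.

```python
from collections import deque

def shortest_attack_path(access, start, target):
    """Breadth-first search over an access graph.

    `access` maps each host to the hosts reachable from it. Returns the
    shortest list of hops from `start` to `target`, or None if unreachable.
    """
    previous = {start: None}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        if host == target:
            # Walk back through predecessors to rebuild the path.
            path = []
            while host is not None:
                path.append(host)
                host = previous[host]
            return path[::-1]
        for neighbour in access.get(host, ()):
            if neighbour not in previous:
                previous[neighbour] = host
                queue.append(neighbour)
    return None

if __name__ == "__main__":
    # Hypothetical environment: hostnames and edges are illustrative only.
    access_graph = {
        "workstation-12": ["file-server", "helpdesk-pc"],
        "helpdesk-pc": ["jump-host"],
        "file-server": ["backup-server"],
        "jump-host": ["finance-db"],
    }
    print(shortest_attack_path(access_graph, "workstation-12", "finance-db"))
```

For defenders, the hops on the shortest path are useful places to break the chain, for example by removing a reused credential.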

Phase 5: Persistence

In this phase, the red team focuses on maintaining long-term access to the compromised network. The goal is to test whether an attacker could remain undetected over time. Common tactics include:

- Creating hidden accounts for future access

- Planting backdoors or remote access tools (RATs)

- Modifying system configurations to resist detection
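Hidden accounts are only useful to an attacker if nobody notices them. The sketch below is a defender-side check that tests whether this kind of persistence would be caught. It assumes a Linux host using the standard `/etc/passwd` format and flags non-root accounts with UID 0. Adding a second UID 0 account under an innocuous name is one well-known way to hide root-equivalent access. The check covers only that technique.

```python
def uid_zero_accounts(passwd_text: str) -> list[str]:
    """Return non-root accounts with UID 0 in /etc/passwd-formatted text."""
    suspicious = []
    for line in passwd_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # passwd format: name:password:UID:GID:GECOS:home:shell
        fields = line.split(":")
        if len(fields) >= 3 and fields[2] == "0" and fields[0] != "root":
            suspicious.append(fields[0])
    return suspicious

if __name__ == "__main__":
    # Hypothetical passwd content with a planted root-equivalent account.
    sample = (
        "root:x:0:0:root:/root:/bin/bash\n"
        "daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin\n"
        "sysupdate:x:0:0::/var/tmp:/bin/bash\n"
    )
    print(uid_zero_accounts(sample))  # ['sysupdate']
```

On a live host, the input would be the contents of `/etc/passwd`. If the red team's planted account goes unflagged by existing monitoring, that is a detection gap worth reporting.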

Phase 6: Exfiltration and Cleanup

The final phase involves simulating the extraction of sensitive data while covering tracks to avoid detection. Activities may include:

- Encrypting and transferring mock data

- Deleting system logs to erase traces of activity

- Restoring compromised systems to their original state

Tools and Techniques Used in Red Teaming Engagements

Each phase of the red teaming process simulates how real-world attackers progress through a target network, from reconnaissance to data exfiltration. To mimic such threats effectively, red teams rely on specialised tools and techniques that test both technical controls and human defences across multiple security layers.

Social Engineering

Social engineering targets human vulnerabilities rather than technical flaws. Red teams use techniques like:

- Email Phishing: Crafting deceptive emails to trick employees into revealing credentials or clicking malicious links.

- Pretexting: Creating false scenarios to manipulate individuals into sharing sensitive information.

- Vishing and Smishing: Voice and SMS phishing tactics targeting personal devices.

Physical Security Testing

Physical security is often overlooked in cybersecurity strategies. Red teams assess it by:

- Tailgating: Gaining access to secure areas by following authorised personnel.

- Bypassing Access Controls: Exploiting weaknesses in locks, keycards, or biometric scanners.

- Device Planting: Introducing rogue devices like keyloggers or network sniffers into the physical workspace.

Application Penetration Testing

Application security is critical for businesses using web platforms and custom software. Red teaming tests involve:

- SQL Injection (SQLi): Exploiting poor input validation to access databases.

- Cross-Site Scripting (XSS): Injecting malicious scripts into web applications.

- API Testing: Identifying vulnerabilities in application programming interfaces (APIs).
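SQL injection arises from poor input validation. The minimal sketch below uses Python's built-in `sqlite3` module and an in-memory database with a hypothetical `users` table to compare a login check that builds SQL by string concatenation with one that uses parameterised queries. Passwords are stored in plaintext only to keep the demonstration short. Real systems should store salted password hashes.

```python
import sqlite3

def setup_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a single hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn

def login_vulnerable(conn, username, password):
    # UNSAFE: user input is concatenated straight into the SQL string.
    query = ("SELECT username FROM users "
             f"WHERE username = '{username}' AND password = '{password}'")
    return conn.execute(query).fetchone() is not None

def login_parameterised(conn, username, password):
    # SAFE: placeholders make the driver treat input strictly as data.
    query = "SELECT username FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone() is not None

if __name__ == "__main__":
    conn = setup_demo_db()
    payload = "' OR '1'='1"
    print(login_vulnerable(conn, "alice", payload))     # True: login bypassed
    print(login_parameterised(conn, "alice", payload))  # False
```

The payload turns the vulnerable `WHERE` clause into `... AND password = '' OR '1'='1'`, which is true for every row. The parameterised version compares the payload as a literal string and rejects it.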

Network Sniffing

Network sniffing involves monitoring and capturing data packets to detect vulnerabilities in network traffic. Techniques include:

- Packet Sniffing Tools: Using tools like Wireshark to capture and inspect unencrypted traffic.

- Man-in-the-Middle (MITM) Attacks: Intercepting communication between systems.

- Wireless Traffic Analysis: Exploiting weak Wi-Fi encryption standards.
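Unencrypted traffic is a problem because captured packets can expose credentials directly. In this minimal sketch, a captured plaintext HTTP request carries an HTTP Basic `Authorization` header, which is only Base64-encoded, not encrypted. The sketch recovers the username and password from it. The request and credentials are hypothetical, and parsing is simplified to a single request in raw bytes.

```python
import base64

def extract_basic_auth(raw_request: bytes):
    """Return (username, password) if a captured plaintext HTTP request
    carries an HTTP Basic Authorization header, otherwise None."""
    for line in raw_request.split(b"\r\n"):
        name, sep, value = line.partition(b":")
        if not sep or name.strip().lower() != b"authorization":
            continue
        scheme, _, token = value.strip().partition(b" ")
        if scheme.lower() != b"basic":
            continue
        # Basic auth is just Base64("username:password"), so decoding reveals it.
        decoded = base64.b64decode(token.strip()).decode("utf-8", errors="replace")
        username, _, password = decoded.partition(":")
        return username, password
    return None

if __name__ == "__main__":
    # Hypothetical captured request; credentials are illustrative only.
    token = base64.b64encode(b"alice:s3cret")
    captured = (b"GET /admin HTTP/1.1\r\nHost: intranet.example\r\n"
                b"Authorization: Basic " + token + b"\r\n\r\n")
    print(extract_basic_auth(captured))  # ('alice', 's3cret')
```

Serving the same request over HTTPS (TLS) would prevent a passive sniffer from reading the header.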

Tainting Shared Content

This technique involves introducing malicious elements into shared resources, such as:

- Compromised Documents: Embedding malware in commonly used files like PDFs or Excel sheets.

- Cloud Storage Attacks: Uploading infected files to shared drives.

- Collaboration Platform Exploits: Leveraging tools like Microsoft Teams or Slack for payload delivery.

Brute Forcing Credentials

Brute forcing involves attempting to crack weak or reused passwords through automation. Techniques include:

- Dictionary Attacks: Using lists of common passwords.

- Credential Stuffing: Exploiting reused credentials from previous breaches.

- Password Spraying: Testing a few common passwords across many accounts, keeping the number of attempts per account low to avoid triggering account lockouts.
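The key difference between password spraying and classic brute forcing is loop order. The minimal sketch below iterates over passwords in the outer loop and accounts in the inner loop, so each account sees at most one attempt per round. It caps the number of rounds below an assumed lockout threshold. `try_login` is a hypothetical callable standing in for whatever authorised test interface an engagement uses, and the accounts are a mock lab directory.

```python
from typing import Callable, Iterable

def password_spray(
    usernames: Iterable[str],
    passwords: list[str],
    try_login: Callable[[str, str], bool],
    lockout_threshold: int = 5,
) -> dict[str, str]:
    """Try a few common passwords across many accounts.

    Rounds are capped at lockout_threshold - 1 so no account is locked out.
    A real engagement would also pause between rounds until the lockout
    observation window resets; that delay is omitted here.
    """
    users = list(usernames)
    found: dict[str, str] = {}
    max_rounds = max(0, lockout_threshold - 1)
    for password in passwords[:max_rounds]:   # outer loop: passwords
        for user in users:                    # inner loop: accounts
            if user not in found and try_login(user, password):
                found[user] = password
    return found

if __name__ == "__main__":
    # Hypothetical lab accounts, for illustration only.
    lab_accounts = {"alice": "Summer2024!", "bob": "x9$Lq!7v", "carol": "Password1"}

    def try_login(user: str, password: str) -> bool:
        return lab_accounts.get(user) == password

    print(password_spray(lab_accounts, ["Password1", "Summer2024!", "Welcome1"], try_login))
```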

Continuous Automated Red Teaming (CART)

CART leverages automation for continuous security testing, allowing businesses to:

- Simulate Threats Regularly: Conducting ongoing assessments instead of periodic tests.

- Automate Reconnaissance: Continuously scanning for vulnerabilities.

- Integrate with SIEM Tools: Feeding results into security information and event management systems for proactive defence.
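Continuous testing largely comes down to comparing each new scan with the last one and reporting what changed. The minimal sketch below diffs two snapshots of exposed services and emits the changes as JSON Lines events. The `host:port/service` strings, event fields and output format are assumptions. A real deployment would run this on a schedule and match the schema its SIEM expects.

```python
import json
from datetime import datetime, timezone

def diff_exposures(previous: set[str], current: set[str]) -> list[dict]:
    """Compare two scan snapshots and describe the changes as events."""
    now = datetime.now(timezone.utc).isoformat()
    events = []
    for asset in sorted(current - previous):
        events.append({"time": now, "type": "new_exposure", "asset": asset})
    for asset in sorted(previous - current):
        events.append({"time": now, "type": "resolved_exposure", "asset": asset})
    return events

def to_json_lines(events: list[dict]) -> str:
    """Serialise events one JSON object per line for log ingestion."""
    return "\n".join(json.dumps(e, sort_keys=True) for e in events)

if __name__ == "__main__":
    # Hypothetical snapshots from two scheduled scans (documentation IP range).
    last_scan = {"203.0.113.10:443/https"}
    this_scan = {"203.0.113.10:443/https", "203.0.113.10:3389/rdp"}
    print(to_json_lines(diff_exposures(last_scan, this_scan)))
```

Reporting only the differences keeps the SIEM focused on new exposures, such as an RDP service that appeared since the previous run, rather than re-alerting on known assets.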

Benefits of Red Teaming

Red teaming goes beyond standard penetration testing, providing deeper insights into an organisation’s overall security posture.

Identifying and Prioritising Security Risks

Red teaming helps organisations uncover hidden vulnerabilities across their digital and physical infrastructure. By simulating realistic attack scenarios, businesses can:

- Detect misconfigurations, outdated software, and weak access controls.

- Assess employee susceptibility to phishing and social engineering tactics.

- Highlight gaps in incident response and detection mechanisms.

Optimising Resource Allocation for Upgrades

Understanding security gaps allows organisations to make informed decisions about where to invest their cybersecurity resources. Red teaming supports:

- Strategic Budgeting: Directing funds toward the most impactful security upgrades.

- Technology Evaluation: Validating the effectiveness of existing security tools.

- Efficiency Improvements: Reducing redundant technologies while focusing on critical defences.

Gaining Insights from an Attacker’s Perspective

A core advantage of red teaming is simulating the mindset and strategies of a real-world adversary. This approach helps organisations:

- Understand how an attacker might approach and exploit their systems.

- Identify unconventional attack vectors often missed by automated testing.

- Develop more effective defensive strategies based on real-world tactics and techniques.

Red Teaming vs. Other Security Approaches

Red teaming is often compared to other cybersecurity strategies, but each approach serves a distinct purpose in strengthening an organisation’s security posture.

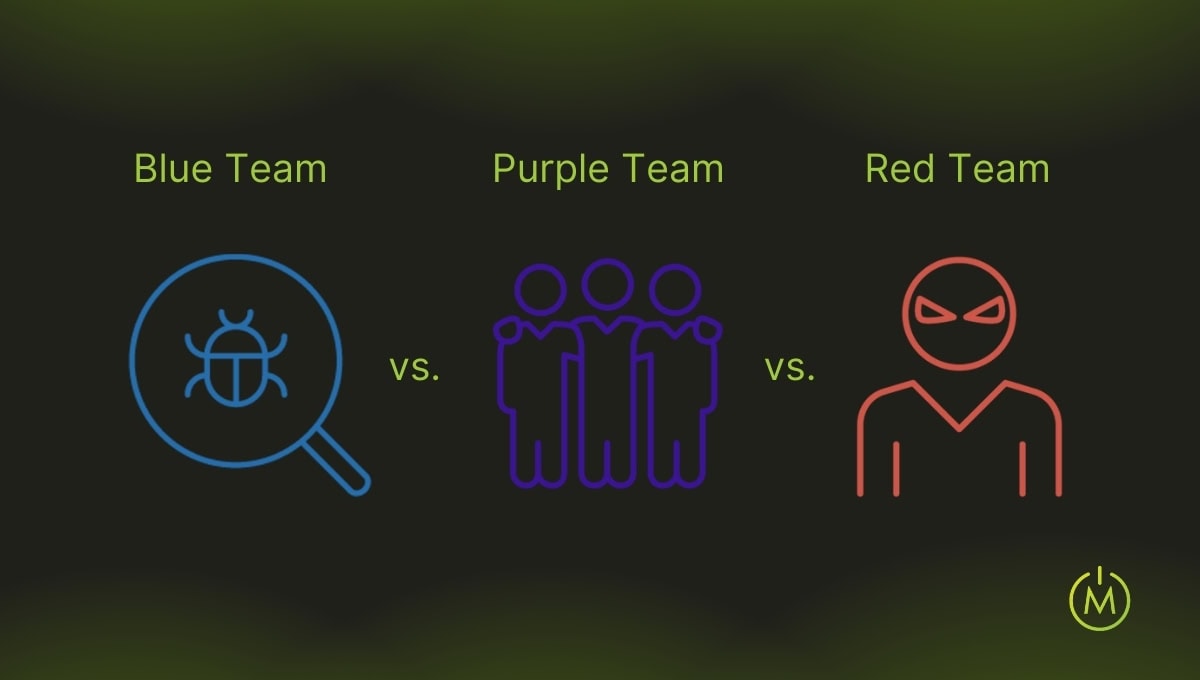

Red Teaming vs. Blue Teaming vs. Purple Teaming

These three security strategies work collaboratively but focus on different aspects of cybersecurity testing and defence:

- Red Teaming: Offensive security testing where a team simulates real-world attack scenarios to identify vulnerabilities and test the organisation’s detection and response capabilities.

- Blue Teaming: Defensive security operations where the team monitors, detects, and responds to threats in real time. Blue teams focus on protecting the organisation’s systems from actual or simulated attacks.

- Purple Teaming: A collaborative approach where red and blue teams work together, sharing insights to improve both offensive and defensive strategies. This method helps blue teams learn from simulated attacks while red teams better understand detection strategies.

Key Difference:

- Red teaming focuses on discovering vulnerabilities, while blue teaming prioritises defence. Purple teaming bridges the gap, ensuring both sides collaborate for continuous improvement.

Red Teaming vs. Penetration Testing

While both red teaming and penetration testing assess an organisation’s security posture, their scope and objectives differ significantly:

| Aspect | Penetration Testing | Red Teaming |
| --- | --- | --- |
| Definition | A controlled, focused assessment targeting specific technical vulnerabilities in a system or application. | A broader, strategic simulation of a full-scale cyberattack across multiple vectors (human, physical, technical). |
| Scope | Targets specific systems or applications. | Evaluates the entire organisational security posture. |
| Approach | Point-in-time assessment. | Simulates ongoing and evolving threats. |
| Goal | Identify and report technical vulnerabilities. | Assess vulnerabilities while testing detection and response capabilities. |
| Key Focus Areas | Technical vulnerabilities in a limited scope. | Holistic testing, including people, processes, and technology. |
| Duration | Short-term, often a few days to weeks. | Long-term, often weeks to months. |
| When to Use | Routine security assessments, compliance checks, validating new systems. | Comprehensive security audits, incident response testing, and evaluating defence maturity in high-risk environments. |
| Ideal For | Checking system-specific weaknesses and regulatory compliance. | Testing overall organisational resilience and advanced threat detection. |

Preparing for a Red Teaming Assessment

Effective preparation ensures that the assessment is aligned with business needs and provides actionable insights for improving security posture.

Questions to Ask Before Initiating an Assessment

Before engaging in a red teaming exercise, organisations should clarify their objectives by asking key questions:

- What assets need protection? (e.g., client data, financial records, intellectual property)

- What are the most critical threats to the organisation?

- Which systems and processes will be in scope?

- Are there compliance or regulatory considerations?

- What is the desired outcome from this assessment?

Setting Clear Goals and Objectives

To maximise the value of a red teaming assessment, organisations should establish specific, measurable objectives. Common goals include:

- Identifying vulnerabilities: Testing for security gaps across technical, human, and physical layers.

- Evaluating detection and response: Assessing how well security teams detect and respond to simulated threats.

- Testing employee readiness: Gauging staff awareness through simulated phishing and social engineering attacks.

- Improving security posture: Identifying areas for long-term improvement and policy adjustments.

Ensuring Alignment with Organisational Priorities

A red teaming assessment should align with the organisation’s broader security and business objectives. Key steps include:

- Involving Leadership: Engage decision-makers to ensure the assessment supports business continuity and risk management strategies.

- Prioritising Critical Assets: Focus on the most sensitive systems and data relevant to the organisation’s operations.

- Balancing Security and Operations: Ensure testing minimises disruption to daily business processes.

- Integrating with Existing Security Programs: Red teaming should complement other initiatives like vulnerability management and penetration testing.

Conclusion

Red teaming has become a critical component of modern cybersecurity, offering organisations a proactive approach to identifying vulnerabilities across digital, physical, and human security layers. When combined with managed IT services, it supports continuous monitoring and stronger defences against evolving cyber threats. A well-structured red team assessment can help organisations strengthen their defences, optimise resource allocation, and stay resilient as threats change.

Ready to fortify your security posture? Contact Matrix Solutions today for expert-led red teaming services tailored to your industry.

FAQs on Advanced Concepts in Red Teaming

What is a CIA Red Team?

A CIA Red Team usually refers to the Central Intelligence Agency's Red Cell, a unit established after the September 11, 2001 attacks to challenge assumptions, test strategies, and identify blind spots in intelligence analysis.

What Companies Are Leading in AI Red Teaming?

AI red teaming has gained prominence as businesses adopt AI-driven systems that require rigorous security testing. Several organisations are leading the way in AI red teaming through in-house testing programs, published research, and specialised services:

- Microsoft: Known for its AI red teaming practices focused on stress-testing large language models (LLMs) and Azure AI platforms against adversarial threats.

- Google DeepMind: Actively involved in AI security testing and ethical assessments for machine learning models.

- OpenAI: Pioneering efforts in testing and securing generative AI models against prompt injection, data leakage, and adversarial manipulation.

- Trail of Bits: A cybersecurity research firm whose work includes AI and machine learning security assessments, focusing on vulnerabilities in machine learning systems.